Beyond Containers: How Docker Offload Supercharges DevOps with Cloud AI

Unlocking the Future of AI-Driven DevOps Without Sacrificing Your Laptop (or Sanity)

AI is no longer a topic reserved for data scientists and researchers—it's transforming how DevOps teams build, test, and ship applications. With large models and increasingly compute-heavy tasks, a modern developer’s laptop often isn’t enough. Enter Docker Offload and Docker Model Runner—tools designed to make AI experiments and production workflows seamless, scalable, and accessible for every DevOps engineer.

What Is Docker Offload?

Docker Offload is a managed service that lets you shift (or “offload”) your container builds and runtime workloads—including resource-hungry AI models—into the cloud, all while using the familiar Docker CLI and Desktop tools you already love. This means you can:

Run containers and build images remotely with just a toggle or command, no complex setup required [1][2][3][4].

Access high-performance infrastructure and powerful GPUs on-demand—even from a low-powered laptop.

Preserve your local dev experience: features like port forwarding and bind mounts feel local, even when actual computing happens in the cloud.

Why Should DevOps Care?

If you’re tired of your laptop begging for mercy during heavy builds, or want to run models bigger than your local GPU can handle, Docker Offload can be a real game changer. Its benefits include:

On-Demand Power: Run models and builds on remote machines with NVIDIA L4 GPUs, suited to ML, LLM, and agentic workloads, without hardware upgrades.

Consistent Environments: Guarantee that every teammate or CI/CD server uses the exact same setup, with no more “works on my machine” nightmares [2][4].

Speed and Efficiency: Accelerate feedback loops in development, testing, and deployment pipelines.

Cost Control: Only pay for cloud resources when you need them. Ephemeral cloud sessions shut down automatically when idle, which keeps charges for unused time to a minimum.

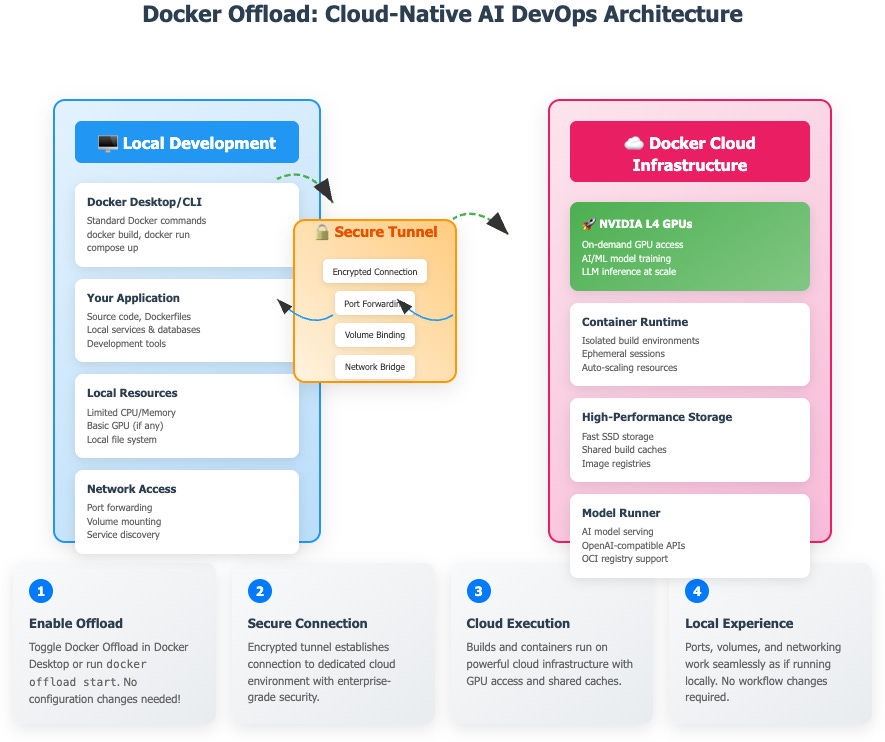

How Docker Offload Works

Here’s how the workflow looks for a DevOps practitioner:

Start Offload: Enable Docker Offload in Docker Desktop or run `docker offload start`.

Cloud Connection: Your Docker CLI or Desktop establishes a secure, encrypted tunnel to a dedicated cloud environment managed by Docker [2][5][6].

Resource Scaling: All builds & container runs execute in the cloud with on-demand GPU, fast storage, and shared caches.

Local-Like Experience: Ports, volumes, and development experience remain the same—your applications can still talk to services on your machine and vice versa.

Conceptual Workflow of Docker Offload for DevOps
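
The workflow above can be sketched in Python as a thin wrapper around the Docker CLI. This is a minimal illustration, not Docker's own tooling: `offload_plan` and `run_with_offload` are hypothetical helper names, and the only Offload commands assumed are `docker offload start`, `docker offload status`, and `docker offload stop`. Since `docker offload start` may prompt interactively, the sketch is best run from a terminal.

```python
import subprocess
from typing import Callable, List, Optional, Sequence

Argv = List[str]


def offload_plan(workload: Sequence[str]) -> List[Argv]:
    """Return the commands for one Offload session, in execution order."""
    return [
        ["docker", "offload", "start"],   # open the cloud session
        ["docker", "offload", "status"],  # confirm the session is active
        ["docker", *workload],            # the usual Docker command, unchanged
        ["docker", "offload", "stop"],    # switch back to the local engine
    ]


def run_with_offload(
    workload: Sequence[str],
    run: Optional[Callable[[Argv], object]] = None,
) -> None:
    """Run one Docker command inside an Offload session, then stop the session.

    `run` is injectable so the sequence can be inspected without Docker installed.
    """
    if run is None:
        run = lambda argv: subprocess.run(argv, check=True)
    start, status, work, stop = offload_plan(workload)
    run(start)
    try:
        run(status)
        run(work)
    finally:
        # Stop the session even if the workload fails, so it does not linger.
        run(stop)
```

For example, `run_with_offload(["run", "--rm", "--gpus", "all", "hello-world"])` would start a session, run the container, and stop the session afterwards. The `try`/`finally` is a design choice that mirrors the cost-control point: the session is closed whether or not the workload succeeds.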

Docker Model Runner: Running AI Models, Docker-Style

For DevOps teams experimenting with AI, Docker Model Runner is built to make running and serving AI models as easy as launching a container. Key highlights:

Pull, Run, Serve: Use Docker commands to fetch, run, and serve LLMs and other models via APIs compatible with OpenAI.

Direct Hardware Access: On supported systems (like Apple Silicon or NVIDIA GPUs), models run as host-native processes for high performance without virtualization [7][8][9][10].

Flexible Integration: Serve models from OCI-compliant registries; integrate easily into microservices or CI/CD workflows.

Consistent Management: Keep models as part of your Docker Compose files, version control, and deployment scripts.
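
To make the "serve via OpenAI-compatible APIs" point concrete, here is a minimal Python sketch that sends one chat request to a model served by Docker Model Runner. Several things here are assumptions rather than facts from this article: that host-side TCP access to Model Runner is enabled on port 12434, that the OpenAI-style routes live under `/engines/v1`, and that a model named `ai/smollm2` has already been pulled (for example with `docker model pull ai/smollm2`). The helper names `build_chat_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: Model Runner is reachable from the host on this address.
BASE_URL = "http://localhost:12434/engines/v1"


def build_chat_request(prompt, model="ai/smollm2", base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,  # assumed model name; use whatever you have pulled
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the text of the first choice."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Because the request follows the OpenAI chat format, an existing OpenAI client library pointed at the same base URL should work in a similar way. Keeping request construction separate from sending makes the payload easy to inspect in a CI step before any model is running.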

Real-World Impact for DevOps

Before Docker Offload

Devs spend countless hours troubleshooting build environments, dependency conflicts, or waiting for local builds/tests to finish.

Many organizations avoid heavy model experimentation due to hardware limitations or inconsistent setups.

After Docker Offload

Massive models (think LLMs or multi-agent AI apps) run with a single command, using cloud GPUs.

Teams get faster iterations and more robust CI/CD, and can confidently run experiments or ship new AI services without worrying about local resources [11][12].

Use Cases Typical for DevOps Teams

Automated CI/CD for AI-Powered Apps: Build, test, and deploy containerized AI models in the cloud as part of automated pipelines, ensuring consistent production releases [13].

Microservices with Embedded AI: Define AI models as part of your stack in `docker-compose.yaml` and run everything together, locally or in the cloud [14][15].

Experimentation & Collaboration: Share offloaded environments so everyone gets identical dev sandboxes, which is great for onboarding, troubleshooting, or hackathons.
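
As one possible shape for a stack with an embedded model, the Python sketch below renders a minimal Compose file in which a service references a model through Compose's top-level `models` element. The helper `render_compose`, the service name `app`, the image `my-app:latest`, the key `llm`, and the model `ai/smollm2` are all illustrative assumptions, and the `models` element needs a recent Docker Compose release, so check your version before relying on it.

```python
from textwrap import dedent


def render_compose(service="app", image="my-app:latest",
                   model_key="llm", model="ai/smollm2"):
    """Render a minimal Compose file that attaches one model to one service.

    All default names are placeholders, not values from any real project.
    """
    return dedent(f"""\
        services:
          {service}:
            image: {image}
            models:
              - {model_key}

        models:
          {model_key}:
            model: {model}
        """)


if __name__ == "__main__":
    # Write the result to docker-compose.yaml, then run `docker compose up`.
    print(render_compose(), end="")
```

Generating the file from a function is only one option; a hand-written `docker-compose.yaml` with the same content works equally well and is easier to review in version control.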

How to Get Started

Install/Update Docker Desktop: Version 4.43+ is required for Offload and Model Runner.

Enable Offload in Settings: Activate cloud offload and GPU support if needed.

Run Your Workloads: Use your regular Docker commands (`build`, `run`, `compose up`), but computation happens in the cloud.

Toggle On/Off as Needed: When offload is stopped, Docker goes back to using your local machine; no reconfiguration required!
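
The version requirement in the first step is easy to check in a script. This is a minimal Python sketch, assuming you already have the Docker Desktop version as a string (for instance, copied from the About dialog). `parse_version` and `supports_offload` are hypothetical helpers, and 4.43 is the minimum stated above.

```python
import re

# Minimum Docker Desktop version, as stated in this article.
MIN_DESKTOP = (4, 43)


def parse_version(text):
    """Extract (major, minor, patch) from a string such as 'Docker Desktop 4.43.1'."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    # A missing patch component is treated as 0.
    return tuple(int(part) for part in match.groups(default="0"))


def supports_offload(version_text, minimum=MIN_DESKTOP):
    """Return True if the version meets the minimum, comparing numerically."""
    version = parse_version(version_text)
    return version[:len(minimum)] >= tuple(minimum)
```

Comparing integer tuples rather than raw strings avoids a common mistake: as text, "4.100" sorts before "4.43", while numerically it is the newer release.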

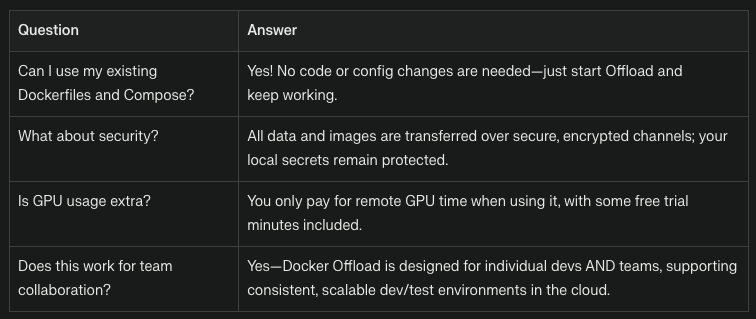

Conclusion: The Future of DevOps + AI

The gap between application development and AI innovation is shrinking fast. Docker Offload and Docker Model Runner deliver on the promise of making AI native to everyday DevOps workflows—turning even the tiniest laptop into an AI powerhouse by “borrowing” the cloud’s muscle only when you need it. DevOps teams can now experiment, deploy, and iterate at the speed of modern AI, without worrying about hardware bottlenecks or inconsistent builds.

Welcome to frictionless, scalable, AI-enabled DevOps.

Ready to run your first AI-accelerated build? Toggle on Docker Offload and see the cloud become part of your local dev toolkit.